After pivoting and narrowing down my research questions for this project, I decided to focus on generative AI typography, specifically on whether it carries over the gender and cultural biases and stereotypes associated with human-made typography. I am comparing generated outputs against two kinds of human-made references: the typography of products marketed neutrally or to a specific gender, and type associated with cultural stereotypes. One of my research questions is: When prompted with culture- or gender-specific keywords, do generative AI models produce typographic outputs that replicate, intensify, or ignore stereotype patterns relative to human-made typographic references?

My literature review found existing research showing that AI can generate images biased by gender, race/culture, and economic status. On stereotypes in typography specifically, books such as *XX, XY: Sex, letters, and stereotypes* and *The Politics of Design* show how typography amplifies and perpetuates gender and cultural stereotypes, and supporting research analyzes both cases semiologically.

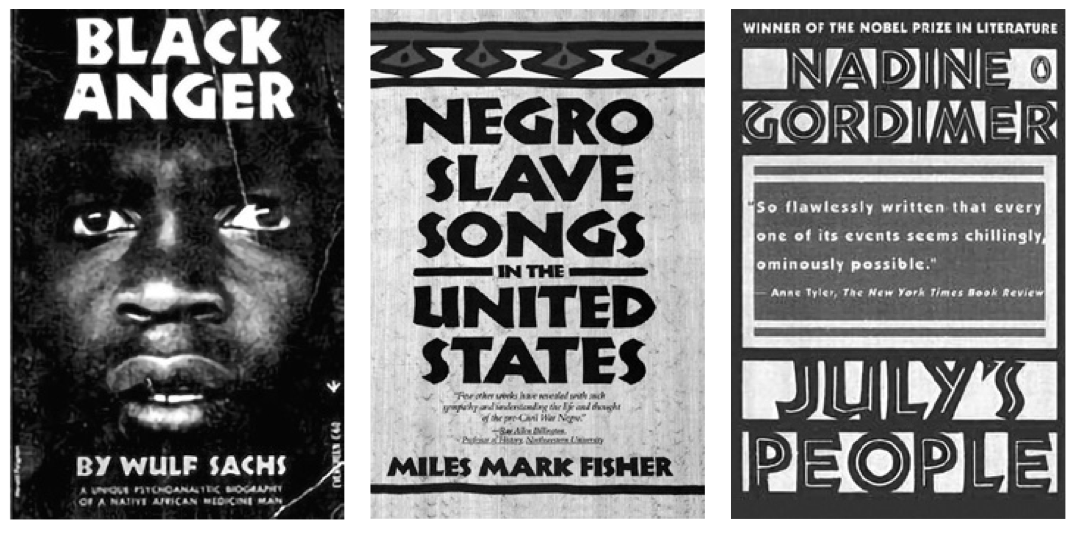

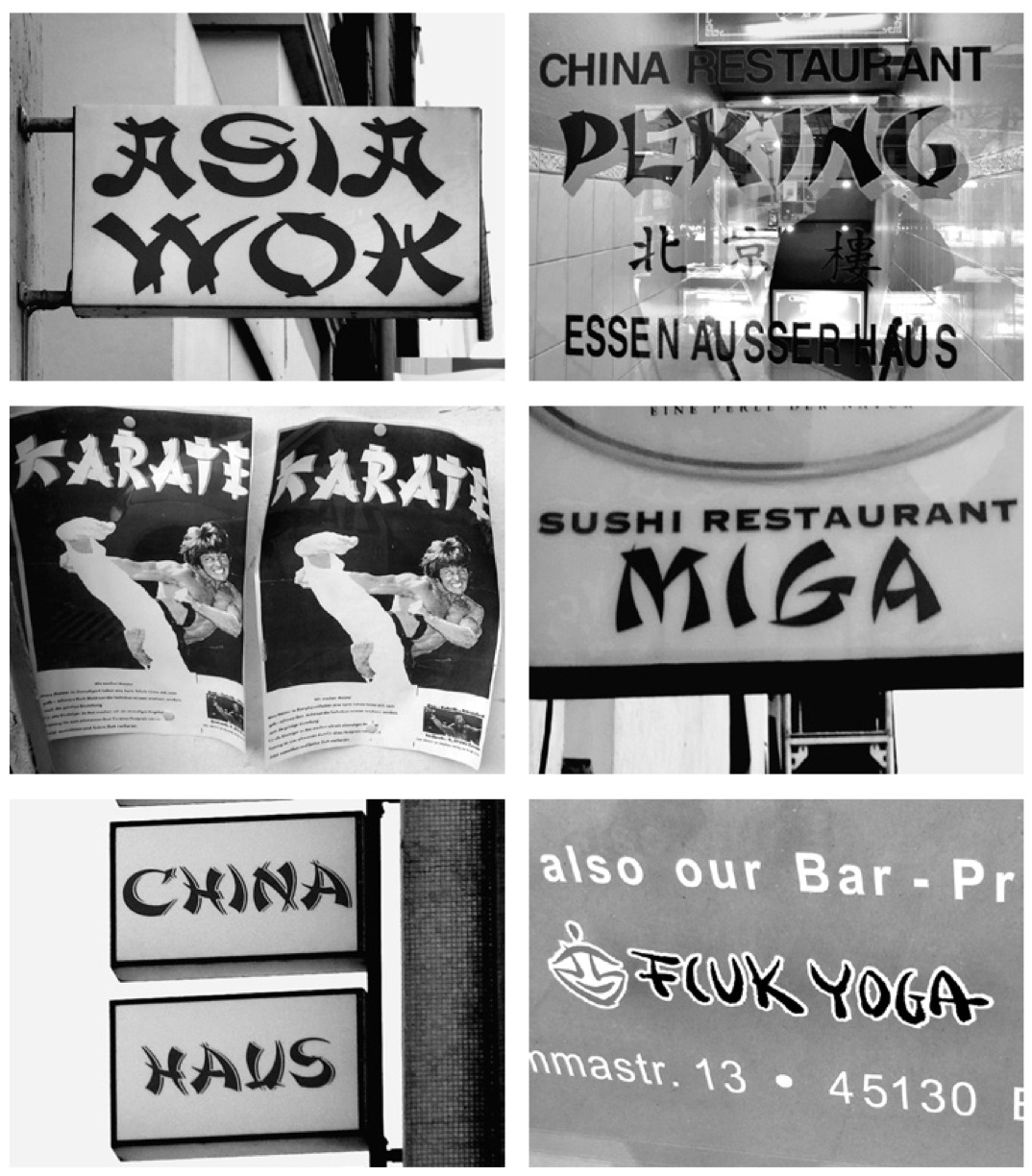

Some particularly interesting research on cultural type comes from Wachendorff’s 2017 article on cultural stereotypes in letterforms in public space. In it, she identifies five strategies that ‘ethnic’ typography uses to associate itself with a particular culture: 1) letter substitution between scripts, 2) letter-shape resemblance between scripts, 3) transfer of a particular writing style, 4) aesthetic association and learned connotation through use, and 5) historic and/or local reference. She applies these strategies to type examples from Asian, Greek, African, and German branding and identity contexts, and I am sharing the examples she used in her paper.

Similar frameworks exist for analyzing gendered type, but for this journal entry I’ll focus on what I’ve generated for cultural type.

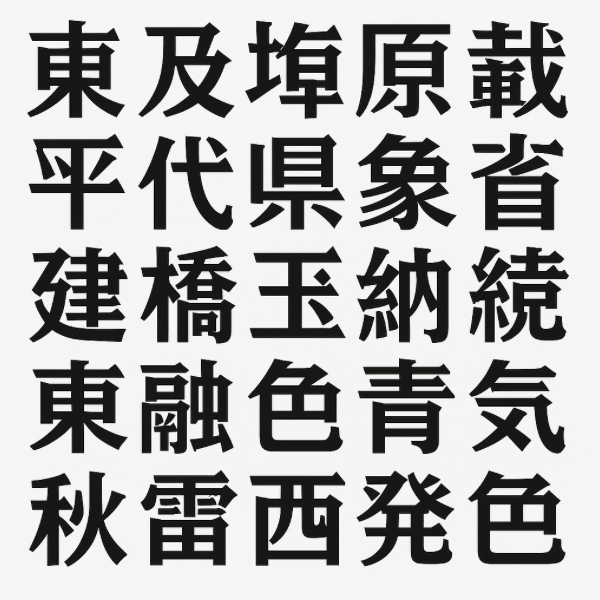

When I generated “Asian” typefaces, the results split roughly 50/50 between latinized brushstrokes resembling traditional Asian calligraphy (the strategy of transferring a particular writing style) and what appear to be more authentic Asian characters. However, I have no basis for judging whether these characters actually carry any meaning, or whether the model is producing a facade: glyphs that merely look like they could come from an Asian writing system.

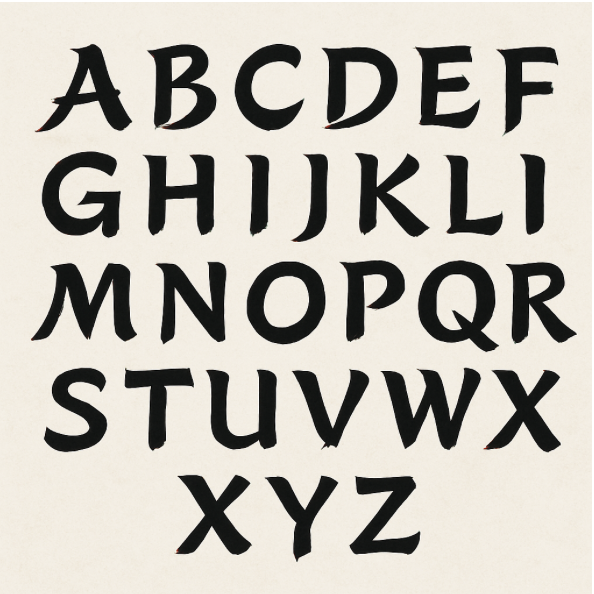

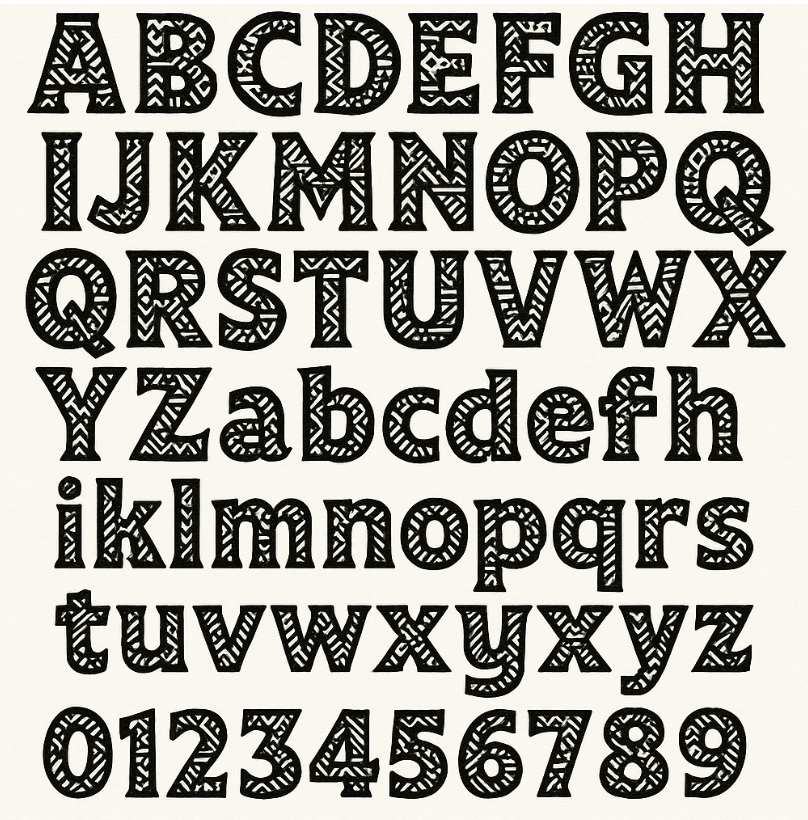

To keep this entry short, I’ll include just one more example: the generated “African” typeface. It is one of the more problematic results. All of my generations prompted with “African typeface” and “Ethnic Typeface” produce a similar style of output: a latinized alphabet characterized by what the model, in a short text description after generation, called “ornamental” and “tribal” features (aesthetic association and learned connotation through use). The structure is very similar to Neuland, with ornamental elements added on the basis of illustrative “tribalism.” Unlike the “Asian” prompts, where cues are taken from actual Asian scripts, none of my generations attempt to provide letters from any existing African script, such as Sorabe or Ge’ez.

Every image was generated from the same prompt structure (Create an image of a (CULTURAL IDENTIFIER) typeface, typography only, black and white). Since the prompt was held constant, it appears that some cultures are underrepresented or over-stereotyped in typographic image generation. This could be for a number of reasons: documentation of African scripts may be less extensive than that of other scripts, either generally or within the model’s training data, or the model may know I am from a Western culture and be tailoring its outputs to me.

I have not yet extended the prompts to other users. Doing so will help answer my second research question: How do potential stereotypical typographic features vary between different users submitting identical prompts? It will be interesting to see whether other users get similar or different results from the same prompts.

_

Sources:

DALL-E 3: "Generate an image of an Asian typeface, black and white, typography only"

DALL-E 3: "Generate an image of an African typeface, black and white, typography only"